Why AI Models Are Failing in Non-Western Contexts

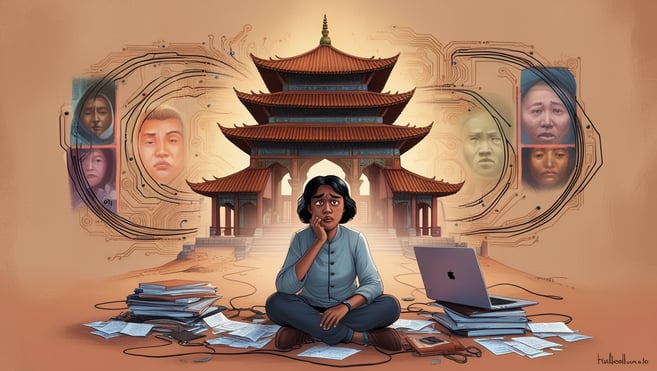

AI's Western bias limits its global impact. This blog explores how training data, design, and cultural gaps cause AI to fail in non-Western contexts, and proposes solutions for creating inclusive, equitable systems that benefit everyone worldwide.

Adheesh Soni

12/2/2024 · 3 min read


Introduction: One Size Does Not Fit All

Have you ever used a language translation app, only to realize that the output doesn’t quite capture the essence of what you meant? Or noticed that facial recognition systems struggle with accuracy when applied to diverse populations? These examples highlight a growing issue: AI systems often falter in non-Western contexts.

As someone deeply fascinated by AI's potential, I’ve found this issue both frustrating and eye-opening. How could technology designed to be universal end up being so exclusive? In this blog, I’ll explore why AI struggles in non-Western environments and why addressing these gaps is essential for a truly global AI future.

1. The Western-Centric Roots of AI

a. Training Data: A Narrow Worldview

AI models are only as good as the data they're trained on. Much of this data is sourced from Western countries, which means it predominantly reflects Western languages, cultures, and societal norms. For instance:

Language Models: AI tools like GPT or Google Translate often excel in English but stumble on nuanced expressions in lower-resource languages like Wolof or Pashto, which have far less text available online to train on.

Facial Recognition Systems: The 2018 Gender Shades study found that commercial gender-classification systems misclassified darker-skinned women at error rates of up to 34.7%, compared with at most 0.8% for lighter-skinned men, a gap widely attributed to underrepresentation in training datasets.
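To make this kind of gap visible, a model's accuracy can be broken down by group instead of reported as a single number. Here is a minimal Python sketch of that idea. The `accuracy_by_group` helper, the group labels, and the toy records are all made up for illustration; they are not data from any study.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical toy predictions, for illustration only (not real study data).
records = [
    ("lighter", "A", "A"), ("lighter", "B", "B"),
    ("lighter", "A", "A"), ("lighter", "B", "B"),
    ("darker", "A", "A"), ("darker", "B", "A"),
    ("darker", "A", "B"), ("darker", "B", "B"),
]

per_group = accuracy_by_group(records)
overall = sum(pred == actual for _, pred, actual in records) / len(records)
gap = max(per_group.values()) - min(per_group.values())

print("overall:", overall)
print("per group:", per_group)
print("gap:", gap)
```

On this toy data the overall accuracy is 0.75, which hides the fact that one group sits at 1.0 and the other at 0.5.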

b. Design Bias: Who Is Building the AI?

Most AI research and development takes place in North America, Europe, and parts of East Asia. The engineers and researchers creating these systems naturally encode their own cultural assumptions into the algorithms, often unintentionally. For example:

AI systems that screen job applications can favor traits deemed desirable in Western contexts, such as assertiveness or individualism, potentially disadvantaging candidates from cultures that prioritize humility or collectivism.

2. Why Non-Western Contexts Matter

a. The Numbers Don’t Lie

Over 80% of the world’s population lives outside Western countries. If AI models fail to accommodate these regions, they risk becoming irrelevant—or worse, harmful. Imagine an AI healthcare system misdiagnosing an illness because its training data excluded African or South Asian populations.

b. Real-World Impacts

Financial Inclusion: Credit-scoring algorithms trained on Western financial behaviors often misjudge applicants in countries like India or Kenya, where many people transact in cash or mobile money and have little formal credit history.

Education: AI-powered learning tools struggle with non-Latin scripts or culturally specific teaching methods, leaving students underserved.

Agriculture: AI models designed for Western farming practices may not translate to smallholder farms in tropical regions, where conditions and crop types differ drastically.

3. Fixing the Global AI Gap

a. Diverse Data Collection

What I’ve learned is that the solution begins with representation. We need datasets that reflect the diversity of human experiences, languages, and lifestyles. This means investing in:

Localized Data Gathering: Partnering with communities to build culturally relevant datasets.

Multilingual Support: Training language models on underrepresented languages, including dialects.
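A first step toward better representation is simply knowing what a dataset already contains. The sketch below assumes each sample carries a language tag, then computes each language's share and flags the ones below a threshold. The `language_shares` and `underrepresented` helpers, the 10% cutoff, and the toy corpus are hypothetical choices for illustration, not an established standard.

```python
from collections import Counter


def language_shares(samples):
    """Return {language: fraction of samples} for (language, text) pairs."""
    counts = Counter(lang for lang, _ in samples)
    total = sum(counts.values())
    return {lang: n / total for lang, n in counts.items()}


def underrepresented(shares, min_share=0.10):
    """List languages whose share falls below min_share (an assumed cutoff)."""
    return sorted(lang for lang, share in shares.items() if share < min_share)


# Hypothetical toy corpus: 16 English, 3 Pashto, 1 Wolof sample.
# The text itself is a placeholder; only the language tags matter here.
corpus = (
    [("en", "placeholder text")] * 16
    + [("ps", "placeholder text")] * 3
    + [("wo", "placeholder text")] * 1
)

shares = language_shares(corpus)
print(shares)
print("needs more data:", underrepresented(shares))
```

A real audit would also need reliable language identification, which is itself weaker for underrepresented languages and dialects, so the tags here are assumed to be given.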

b. Collaborative Development

To create inclusive AI, we need diverse teams. This includes researchers, developers, and ethicists from various cultural and geographical backgrounds. By incorporating non-Western perspectives into the design process, we can build systems that cater to everyone.

c. Policy and Regulation

Governments and international bodies must play a role in ensuring AI fairness. Policies that mandate transparency, accountability, and diversity in AI development can help mitigate biases.

4. A Philosophical Question: What Should AI Represent?

Should AI strive to mirror the entire world or prioritize efficiency in specific contexts? This debate is crucial as we navigate the future of AI. In my opinion, technology should be a bridge, not a barrier. It should empower all of humanity, not just a privileged subset.

But achieving this requires us to rethink how we define "success" in AI. Is it enough for a model to perform well on benchmarks, or should we measure its ability to serve diverse communities?
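One way to make that question concrete is to report two numbers side by side: the usual benchmark average and the score for the worst-served community. The sketch below is only an illustration under stated assumptions. The community names, the counts, and both helper functions are hypothetical.

```python
def benchmark_score(results):
    """Pooled accuracy across all samples, as a single leaderboard number."""
    correct = sum(c for c, _ in results.values())
    total = sum(n for _, n in results.values())
    return correct / total


def worst_group_score(results):
    """Accuracy of the community the model serves least well."""
    return min(c / n for c, n in results.values())


# Hypothetical evaluation results: community -> (correct answers, test samples).
results = {
    "community_a": (855, 900),  # heavily represented in the benchmark
    "community_b": (60, 100),   # lightly represented
}

print("benchmark average:", benchmark_score(results))
print("worst-served community:", worst_group_score(results))
```

With these made-up counts, the benchmark average comes out at 0.915 while the worst-served community sees 0.6, so a model can look strong on a leaderboard and still underserve the people it was tested on least.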

Conclusion: Toward a Truly Global AI

AI's failures in non-Western contexts highlight a broader truth: technology reflects the values and priorities of its creators. If we want AI to be a force for global good, we must prioritize inclusivity at every stage, from data collection to model deployment.

The road to equitable AI isn't easy, but it's necessary. By addressing these challenges, we can unlock the true potential of AI: technology that serves everyone, everywhere.